Asynchronous libraries performance

I've published my next post on LShift's blog.

By the way, this is my 100th post on this blog!

Posted by majek at 18:06, 0 comments

Labels: Algorithms, Asynchrony, Linux

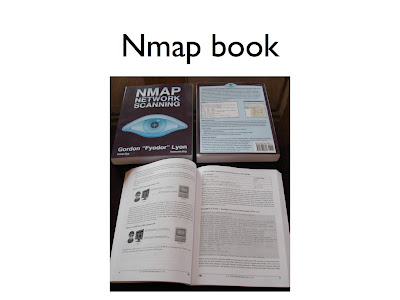

I'm really excited. After years of work, Fyodor has finally finished The Book, "Nmap Network Scanning". It should be a great gift for every security geek. You can preorder it on Amazon for $34.

Posted by majek at 15:03, 0 comments

    $ wget http://www.apache.org/dist/incubator/qpid/M3-incubating/qpid-incubating-M3.tar.gz
    $ tar xvzf qpid-incubating-M3.tar.gz
    $ cd qpid-incubating-M3/

Hmm. What’s next? There are no README files. After some googling I found that there’s a Getting Started guide. According to it, this should be the magic command:

    $ cd java/broker
    $ PATH=$PATH:bin QPID_HOME=$PWD ./bin/qpid-server -c etc/persistent_config.xml
    ./bin/qpid-server: line 37: qpid-run: No such file or directory

Okay, let’s look for the missing file somewhere:

    $ find ../.. -name qpid-run
    ../../java/common/bin/qpid-run
    $ cp ../../java/common/bin/qpid-run bin

Next try:

    $ PATH=$PATH:bin QPID_HOME=$PWD ./bin/qpid-server -c etc/persistent_config.xml
    Setting QPID_WORK to /home/majek as default
    System Properties set to -Damqj.logging.level=info -DQPID_HOME=/home/majek/b/qpid-incubating-M3/java/broker -DQPID_WORK=/home/majek
    Using QPID_CLASSPATH /home/majek/b/qpid-incubating-M3/java/broker/lib/qpid-incubating.jar:/home/majek/b/qpid-incubating-M3/java/broker/lib/bdbstore-launch.jar
    Info: QPID_JAVA_GC not set. Defaulting to JAVA_GC -XX:+UseConcMarkSweepGC -XX:+HeapDumpOnOutOfMemoryError
    Info: QPID_JAVA_MEM not set. Defaulting to JAVA_MEM -Xmx1024m
    Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/qpid/server/Main
    Caused by: java.lang.ClassNotFoundException: org.apache.qpid.server.Main

That’s enough. I’m definitely too stupid to run this server and too busy to waste more time here (there is also another reason why I’m not really interested in qpid, read on).

    $ python easy_install amqplib

The broker:

    $ wget http://www.rabbitmq.com/releases/rabbitmq-server/v1.4.0/rabbitmq-server_1.4.0-1_all.deb
    $ sudo dpkg -i rabbitmq-server_1.4.0-1_all.deb

    ~/amqplib-0.5/demo$ ./demo_send.py 1
    ~/amqplib-0.5/demo$ ./demo_receive.py

The most common way of using Barry’s library is to write software that only consumes data from AMQP: use one event loop that blocks forever, and when something happens on AMQP a callback is executed. My use case is slightly different. The project I’m writing uses asynchronous programming, which means that I have one event loop that is not the AMQP event loop. I want to run the AMQP stack every time something happens on a socket. This is how it would look in pseudocode:

    while True:
        nonblocking_consume_amqp_events()
        <magically_wait_for_activity_on_amqp_socket>

Qpid's Python client has only a blocking interface, so it’s impossible to write code like that. Fortunately there’s a nonblocking client in Barry’s library. There’s even an example:

    ./nbdemo_receive.py
    Traceback (most recent call last):
    [...]
    File "build/bdist.linux-i686/egg/amqplib/nbclient_0_8.py", line 74, in write
    P' read_buf='&!amq.ctag-FC3ET7kTcqy/A93gJYQWqw==6!amq.ctag-FC3ET7kTcqy/A93gJYQWqw==myfan2

    ['1', '1']
    ['2', '1', '2']
    ['3', '1', '2', '3']
    ['4', '1', '2', '3', '4']

If the problem is with acknowledgements, one could suggest setting no_ack on the channel to disable them. Sorry, this also doesn’t work.

I send ten messages:

    ['a_0', 'a_1', 'a_2', 'a_3', 'a_4', 'a_5', 'a_6', 'a_7', 'a_8', 'a_9']

Then another ten:

    ['b_0', 'b_1', 'b_2', 'b_3', 'b_4', 'b_5', 'b_6', 'b_7', 'b_8', 'b_9']

I receive:

    ['b_0', 'b_1', 'b_2', 'b_3', 'b_4', 'b_5', 'b_6', 'b_7', 'b_8', 'b_9',
    'a_0', 'a_1', 'a_2', 'a_3', 'a_4', 'a_5', 'a_6', 'a_7', 'a_8', 'a_9',
    'b_0', 'b_1', 'b_2', 'b_3', 'b_4', 'b_5', 'b_6', 'b_7', 'b_8', 'b_9']

While I was working on this issue I was worried that my Python process was eating more and more memory. After some debugging I discovered that amqplib has very nice memory leaks (okay, reference cycles).

    # Python 2 code. MException and the nbamqp module are defined/imported
    # elsewhere (not shown in this excerpt).
    def my_nb_callback(ch):
        # Break out of nbloop() instead of letting it sleep and retry.
        raise MException

    conn = nbamqp.NonBlockingConnection('localhost',
                                        userid='guest', password='guest',
                                        nb_callback=my_nb_callback, nb_sleep=0.0)
    ch = conn.channel()
    ch.access_request('/data', active=True, read=True)
    ch.exchange_declare('myfans', 'fanout', auto_delete=True)
    qname, _, _ = ch.queue_declare()
    ch.queue_bind(qname, 'myfans')

    msgs = []
    def callback(msg):
        msgs.append( msg )

    ch.connection.sock.sock.setblocking(False)
    ch.basic_consume(qname, callback=callback)

    while True:
        msgs = []
        <magically_wait_for_data_on_ch.connection.sock.sock>
        try:
            nbamqp.nbloop([ch])
        except MException:
            pass
        # Acknowledge everything, but report each message body only once.
        unique_msgs_filter = {}
        unique_msgs = []
        for msg in msgs:
            msg.channel.basic_ack(msg.delivery_tag)
            if msg.body not in unique_msgs_filter:
                unique_msgs_filter[msg.body] = True
                unique_msgs.append(msg.body)
        print '%r ' % (unique_msgs)
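One way to fill in the "magically wait" placeholder is `select.select()` from the standard library. The sketch below is a generic Python 3 illustration, not amqplib code: a `socket.socketpair()` stands in for the AMQP connection socket, and the helper names `wait_for_activity` and `drain` are made up for this example.

```python
import select
import socket


def wait_for_activity(sock, timeout=None):
    """Block until sock is readable or the timeout expires; return True if readable."""
    readable, _, _ = select.select([sock], [], [], timeout)
    return bool(readable)


def drain(sock, bufsize=4096):
    """Read everything currently available from a non-blocking socket."""
    chunks = []
    while True:
        try:
            data = sock.recv(bufsize)
        except BlockingIOError:
            break          # nothing more to read right now
        if not data:
            break          # peer closed the connection
        chunks.append(data)
    return b"".join(chunks)


if __name__ == "__main__":
    # A socket pair stands in for the broker connection (assumption).
    broker_side, client_side = socket.socketpair()
    client_side.setblocking(False)
    broker_side.sendall(b"frame")
    if wait_for_activity(client_side, timeout=1.0):
        print(drain(client_side))
    broker_side.close()
    client_side.close()
```

In a real program the same `select()` call would watch all the sockets of the application at once, and the AMQP stack would be run only when its socket shows up as readable.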

    $ rabbitmqctl --help
    Password:

Which password would you like? Even if I knew it, I’m not going to give any passwords away just to see the help message, sorry. Next try:

$ rabbitmq-server --help

/usr/sbin/rabbitmq-server: 44: cannot create /var/log/rabbitmq/rabbit.log.bak: Permission denied

/usr/sbin/rabbitmq-server: 45: cannot create /var/log/rabbitmq/rabbit-sasl.log.bak: Permission denied

{error_logger,{{2008,11,21},{0,26,12}},"Protocol: ~p: register/listen error: ~p~n",["inet_tcp",einval]}

{error_logger,{{2008,11,21},{0,26,12}},crash_report,[[{pid,<0.22.0>},{registered_name,net_kernel},{error_info,{error,badarg}},{initial_call,{gen,init_it,[gen_server,<0.19.0>,<0.19.0>,{local,net_kernel},net_kernel,{rabbit,shortnames,15000},[]]}},{ancestors,[net_sup,kernel_sup,<0.9.0>]},{messages,[]},{links,[<0.19.0>]},{dictionary,[{longnames,false}]},{trap_exit,true},{status,running},{heap_size,377},{stack_size,21},{reductions,309}],[]]}

Posted by majek at 15:43, 4 comments

Labels: Messaging, Performance

A few times I was pissed off by this screen:

It means that I hadn't activated Vista in time and Microsoft stopped liking me. The problem is that at least twice I was caught by this screen when I had no access to the net. I had the WiFi password but hadn't entered it yet. When Vista is blocked you don't have access to any networking settings, so the password is useless. Yet another time, because of a bug in Vista, I needed to re-enter my Windows key. Of course the easiest way to get the key is to use KeyFinder rather than waste time searching for it. In the end it would probably be somewhere online anyway, like in MSDNAA.

Fortunately you can easily escape from the blocked Vista. This is how you can do it. After clicking "Activate now" this screen appears:

Just choose "buy a new product key online", which spawns a new browser window. I had Opera set as the default browser, but any browser should work. The trick is to enter "c:\windows\system32\cmd.exe" as the url.

We want to run this magic program.

Next, the well-known black cmd window should appear. We're back home :) Just enter "explorer" to bring up the usual Windows Start menu and task bar.

That's it. You now have normal access to your computer. You can then configure wireless networks or do whatever you like.

The only problem is remembering these instructions in case you don't have access to the internet.

Posted by majek at 19:16, 0 comments

Labels: Security

On the LShift blog I wrote about simple inter-process locks in Python.

Posted by majek at 01:40, 0 comments

In translation "A team of highly trained monkeys has been dispatched to deal with this situation."

I'm not the only one to see this message. I think it's even better than "you broke reddit" message.

Posted by majek at 13:53, 0 comments

    <form id='f' method="post" enctype="text/plain"
    action="http://jukebox/rpc/jukebox" >
    <input
    name='{"version":"1.1","id":287,"method":"enqueue","params":["x'
    value='x",[{"id":["jukebox@xxxx",[1,1,1]],"url":"http://[...]one%20more%20time.mp3","username":null}],false]}'>
    </form>
    <script>
    f.submit()
    </script>

The hardest part was finding somewhere to hide the equals sign from the key=value syntax that is used when the encoding is text/plain. My code puts the equals sign into the song owner JSON field.
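To see why the trick works, it helps to reproduce what the browser sends. With `enctype="text/plain"` each field is serialized as `name=value` followed by CRLF, so the input's name and value are glued together around a single equals sign. The Python sketch below mimics that serialization and checks that the result parses as JSON. The helper name `text_plain_body` is made up, and the URL is a placeholder because the original one is elided.

```python
import json


def text_plain_body(fields):
    """Serialize form fields the way enctype="text/plain" does: name=value + CRLF."""
    return "".join("%s=%s\r\n" % (name, value) for name, value in fields)


# The two halves of the JSON document, split where the "=" will be inserted.
name = '{"version":"1.1","id":287,"method":"enqueue","params":["x'
value = ('x",[{"id":["jukebox@xxxx",[1,1,1]],'
         '"url":"http://example.invalid/song.mp3",'  # placeholder URL (assumption)
         '"username":null}],false]}')

body = text_plain_body([(name, value)])
request = json.loads(body)  # the trailing CRLF is plain whitespace to a JSON parser

print(request["method"], request["params"][0])
```

The equals sign ends up inside the first string parameter, so the server sees a well-formed JSON-RPC request.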

Posted by majek at 02:29, 0 comments

Labels: Security

The beginnings

>>> datetime.datetime.now()-dateutil.relativedelta.relativedelta(months=22)

datetime.datetime(2007, 1, 2)

Posted by majek at 04:00, 0 comments

Labels: Idea

New, interesting technologies on the web are often criticized, sued and sometimes even ruined by court rulings. There are a lot of lawsuits over new technologies.

Let's list a few important technologies that were (are?) considered illegal by some people:

Posted by majek at 21:48, 1 comment

Labels: Idea

In this presentation I would like to talk about my adventure with extending Nmap and what I learned from it regarding asynchronous programming.

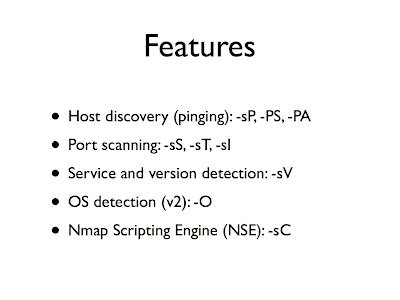

Nmap is a port scanner with many other features.

On this slide you can see an example of Nmap output.

It shows open tcp ports on some target machine. It also shows service type (if it is ssh or http or something else) and version of a daemon running behind discovered port.

Nmap can execute user defined scripts for various services. Above we can see an example result of a script that retrieves HTML title from the HTTP server.

Nmap also tries to guess the operating system on the target machine. In the example output Nmap's OS detection wasn't very successful; the OS guesses aren't accurate.

Nmap isn't just a basic port scanner; it's a fully featured network discovery tool. It sends a lot of data to the target hosts and opens a lot of tcp/ip or udp connections to the target. To do this efficiently Nmap must be written in a specific way: asynchronously, using nonblocking sockets where possible.

Some parts of Nmap, like the OS detection engine, are extremely sensitive to timing.

Nmap sends a lot of custom-built packets. The system API for sending custom packets is called raw sockets. Unfortunately these sockets can't be used to listen for raw packets on the wire. To read packets Nmap uses the libpcap (packet capture) library. Libpcap comes from the tcpdump project; Wireshark, a graphical packet analyzer, uses it too.

When a program opens a pcap descriptor to sniff the network, it passes a BPF filter so that it receives only the packets that match. A BPF filter is something like a regular expression for packets. The filter is compiled and matched against all the packets that come through the wire. This works extremely fast for a single pcap descriptor, but it wasn't designed to handle thousands of open pcap descriptors. Every packet that arrives at your machine is matched against every open pcap descriptor, so opening too many of them may slow down the whole system.
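To make the "regular expression for packets" idea concrete, here is a small Python sketch that only builds a filter expression in the pcap filter syntax. It selects tcp replies from one host on a set of ports that have the SYN or RST flag set. The function name `reply_filter` is hypothetical, and handing the string to libpcap would need a binding that is not shown here.

```python
def reply_filter(target_ip, ports):
    """Build a pcap filter expression for SYN+ACK or RST replies from one host."""
    port_clause = " or ".join("src port %d" % port for port in sorted(ports))
    return (
        "tcp and src host %s and (%s) and "
        "tcp[tcpflags] & (tcp-syn|tcp-rst) != 0" % (target_ip, port_clause)
    )


if __name__ == "__main__":
    # 192.0.2.1 is a documentation address, used here only as an example.
    print(reply_filter("192.0.2.1", [80, 22]))
```

One descriptor with a filter like this can serve a whole scan of a host, which is much cheaper than opening one descriptor per probed port.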

Before going on, please make sure you know what a three-way handshake on tcp/ip connections is.

Every Nmap scan consists of several stages.

First Nmap must know whether the target hosts are really online. This is especially useful when you're scanning a wide range of ip addresses. We really don't want to waste time waiting for black-hole ip's. Detecting whether a host is up can be done with a simple icmp ping packet or with more complicated techniques.

Once we know which hosts are up, Nmap does the real scanning. It sends a lot of SYN packets to discover which network ports are open or closed.

Once we know which ports are open, we can start the service and version detection phase. Nmap opens a lot of tcp/ip connections to detect which services, and exactly which daemons, are running there.

After this, Nmap can run operating system detection and try to guess which OS is running on a target host.

Finally, Nmap can run the Nmap Scripting Engine to grab even more detailed information about the services running on open ports. In the first slide we saw the results of a script that printed the title of an html page fetched from an open http server.

Most people don't understand how Nmap's basic -sS SYN scanning works. This is really port scanning kindergarten, so if you know how it works, you can skip this slide.

After the host discovery phase we know that a target host is online. Then Nmap tries to determine which tcp ports are open. To do this, Nmap sends a lot of SYN packets to the target host via a raw socket, bypassing the operating system's networking stack.

If the remote port is open, the scanning host receives a SYN+ACK packet. But the tcp/ip connection wasn't actually opened by the scanning host's OS: Nmap sent the SYN packet, not the OS. The OS doesn't recognize the SYN+ACK response, so it sends an RST packet, which tells the target to drop the connection.
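The decision a SYN scanner makes from the reply can be sketched as a tiny classifier over the TCP flag bits. This is an illustration in Python, not Nmap's code; the function name and the "filtered" label for a missing reply are assumptions of the sketch, while the flag values are the standard TCP header bits.

```python
# TCP header flag bits.
SYN = 0x02
RST = 0x04
ACK = 0x10


def classify_syn_reply(flags):
    """Map the flags of the reply to a SYN probe onto a port state.

    flags is the TCP flags byte of the reply, or None if nothing came back.
    """
    if flags is None:
        return "filtered"   # no answer at all (assumed label)
    if flags & RST:
        return "closed"     # the target refused the connection
    if flags & SYN and flags & ACK:
        return "open"       # second step of the three-way handshake
    return "unknown"


if __name__ == "__main__":
    for reply in (SYN | ACK, RST | ACK, None):
        print(reply, classify_syn_reply(reply))
```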

Why scan this way instead of just opening a lot of normal operating system tcp/ip connections? A normal tcp/ip connection would need to be closed gracefully (FIN flag), using even more packets. The SYN scan is faster: only 3 packets are needed to learn whether a port is open or closed.

I prefer this diagram over the previous slide. I would like to repeat that Nmap injects the first SYN packet on the wire. Then Nmap sniffs for the SYN+ACK packet on the wire.

Of course Nmap can scan many ports at the same time.

We can say that Nmap first sends a lot of SYN packets and then waits for SYN+ACK or RST packets. When a packet is received from the wire, Nmap does something with it, for example updates its data structures with the received information. Then Nmap goes back to the main event loop and waits for yet another packet from the wire.

The point is that every Nmap stage is waiting for something different.

Host discovery phase can wait for icmp ping response packets or many other types.

Port scanning, as I mentioned in previous slides, is waiting for SYN+ACK or RST packets.

Service detection on the other hand is using a lot of normal tcp/ip or udp connections.

OS detection engine is waiting for a lot of magic packets.

Scripting Engine is also waiting for normal tcp/ip or udp connections.

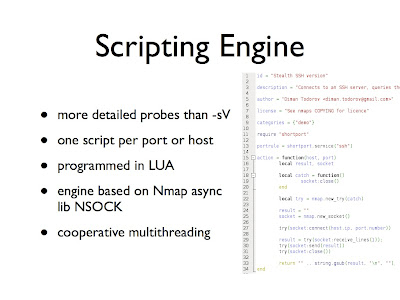

Let's now talk about the Scripting Engine in detail.

The Nmap Scripting Engine (NSE) is a new feature in Nmap. NSE was created because Nmap's service and version detection wasn't accurate enough. Service detection is really a very basic technology. It just grabs a banner from an open port and matches it against thousands of regular expressions.

This is enough for detecting most daemons, but it doesn't work for more complicated things. For example, the Skype client opens a tcp port. This port returns valid answers to http queries, so it was detected as an http server. But Skype is not an http server. We weren't able to distinguish the two before NSE.

The technology behind scripts is not complicated. A script is written sequentially in Lua, a convenient, simple, high-level scripting language.

After normal scanning Nmap works out which scripts should be executed and runs them against open ports. A script usually opens a network socket and sends or receives data on it. If a script is waiting for something on a network socket, it's frozen (suspended). When we receive something from the socket, we continue the script until it's suspended again (or exits).

This is called cooperative multitasking. It allows us to write scripts sequentially and execute a lot of them concurrently, because the scripts spend most of their time waiting for data on a socket.
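The suspend-and-resume idea can be sketched in a few lines of Python, with generators playing the role of Lua coroutines and `select()` playing the role of the event loop. This is only an illustration of the technique, not NSE code: the names `run` and `banner_grabber` are made up, and each task is assumed to wait on its own socket.

```python
import select
import socket


def run(tasks):
    """Run generator-based tasks; each one yields the socket it is waiting on."""
    waiting = {}                      # socket -> suspended task
    for task in tasks:
        try:
            waiting[next(task)] = task
        except StopIteration:
            pass                      # task finished without ever waiting
    while waiting:
        readable, _, _ = select.select(list(waiting), [], [])
        for sock in readable:
            task = waiting.pop(sock)
            try:
                waiting[next(task)] = task   # resume until the next wait
            except StopIteration:
                pass                         # task has exited


def banner_grabber(sock, results, name):
    """A 'script' written sequentially: wait for data, then record the banner."""
    yield sock                        # suspended here until sock is readable
    results[name] = sock.recv(1024)


if __name__ == "__main__":
    # Socket pairs stand in for connections to scanned services (assumption).
    far1, near1 = socket.socketpair()
    far2, near2 = socket.socketpair()
    results = {}
    far2.sendall(b"HTTP/1.0 200 OK")
    far1.sendall(b"SSH-2.0-demo")
    run([banner_grabber(near1, results, "ssh"),
         banner_grabber(near2, results, "http")])
    print(results)
```

Each script reads like blocking code, yet none of them ever blocks the loop, which is what lets many of them run concurrently.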

The scripting engine is based on Nsock, Nmap's asynchronous library. This library is capable of waiting for many network descriptors at the same time.

Like every asynchronous library, Nsock has a main loop in which it waits for descriptors. When something happens on a descriptor, Nsock executes the callback registered for that descriptor.

Connecting this with the Lua engine is pretty straightforward. When something happens on a descriptor, we just continue the Lua script that is waiting for data on that descriptor.

I thought that NSE was great, but writing scripts that can only wait for normal tcp/ip or udp connections is boring. I thought it would be great to do something at a lower level, like injecting custom packets into tcp/ip connections or listening to raw packets from the target host.

That's why I created raw sockets/libpcap support for NSE. It turned out to be rather complicated.

Adding pcap support to Nsock was only part of the job. The idea of the changes is rather clear: in one event loop we need to wait for both network descriptors and pcap descriptors at the same time. Internally it's rather complicated because of libpcap limitations.

As a result, when a packet is received from a libpcap descriptor, a callback is called, just like with normal network sockets.

Connecting this to the Lua engine wasn't easy. The main problem is that, as I mentioned before, pcap descriptors are rather heavy. So one pcap descriptor is opened by a script and shared between all the instances of that script. This means that when a packet is received from the network, we don't actually know which Lua thread should be woken.

I needed to create a custom packet dispatcher to know which thread to wake up.
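The post does not describe how the dispatcher works, so the following Python sketch is only one plausible shape for it, under the assumption that each waiting thread registers a key (for example the source address it expects) and that a key can be computed from every captured packet. All names here are hypothetical.

```python
class PacketDispatcher:
    """Route packets from one shared capture to the threads waiting for them."""

    def __init__(self, key_of):
        self._key_of = key_of         # function: packet -> lookup key
        self._waiters = {}            # key -> list of callbacks to wake

    def wait_for(self, key, callback):
        """Register a waiter that should be woken by a packet with this key."""
        self._waiters.setdefault(key, []).append(callback)

    def dispatch(self, packet):
        """Wake every waiter registered for the packet's key; return how many."""
        callbacks = self._waiters.pop(self._key_of(packet), [])
        for callback in callbacks:
            callback(packet)
        return len(callbacks)


if __name__ == "__main__":
    # Packets are modelled as plain dicts keyed by source address (assumption).
    dispatcher = PacketDispatcher(key_of=lambda pkt: pkt["src"])
    woken = []
    dispatcher.wait_for("192.0.2.1", woken.append)
    dispatcher.dispatch({"src": "192.0.2.9", "data": b"noise"})   # nobody waits
    dispatcher.dispatch({"src": "192.0.2.1", "data": b"reply"})   # wakes waiter
    print(woken)
```

With a table like this, one heavy pcap descriptor can feed any number of suspended scripts, and packets nobody asked for are simply dropped.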

Finally I was able to open pcap descriptors and send raw packets from Lua scripts. I think that the possibilities of such scripts are huge. Here are some examples of my scripts.

The first script detects which hosts on the local network are running tcpdump. More precisely, it tries to determine whether a host has a network card in promiscuous mode. I'd be happy to explain how it works, but that's outside the scope of this presentation. In short, the script sends some custom arp-request packets and waits for arp-reply answers.

The next script is an implementation of Lcamtuf's 0trace tool. It's a traceroute-like tool that can go through firewalls. It injects custom packets with small TTL values into a valid tcp/ip connection, so that it can record the ip addresses of the routers on the way.

The next script is an implementation of the "ping -R" record route option. Record route is an ip header option that tells the routers on the way to record their ip addresses in the packet. The results are more accurate than a normal traceroute, but there are only 9 slots for ip addresses in an ip packet.
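The limit of 9 follows from the IPv4 header layout, and the arithmetic can be written out in a few lines of Python. The header length field counts 32-bit words in 4 bits, the fixed part of the header takes 20 bytes, and the record route option itself needs 3 bytes (type, length, pointer) before the address list. The constant and function names are just labels for this sketch.

```python
MAX_IP_HEADER = 15 * 4      # 4-bit header length field, counted in 32-bit words
BASE_IP_HEADER = 20         # fixed part of the IPv4 header, in bytes
RR_OPTION_OVERHEAD = 3      # option type, length and pointer bytes
IPV4_ADDRESS = 4            # bytes per recorded address


def record_route_slots():
    """Number of router addresses that fit into the record route option."""
    option_space = MAX_IP_HEADER - BASE_IP_HEADER          # 40 bytes
    return (option_space - RR_OPTION_OVERHEAD) // IPV4_ADDRESS


if __name__ == "__main__":
    print(record_route_slots())
```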

My favorite script is an implementation of another Lcamtuf tool called p0f. P0f is a passive fingerprinting project. Basically p0f creates an operating system fingerprint from a single SYN packet. The fingerprint can tell which OS the target host is running without sending any custom packets. It's stealthy and passive.

I wanted to implement this tool, but Nmap is an active scanner. The idea was to open a normal tcp/ip connection to the target and create a fingerprint based on the SYN+ACK response. The results aren't very granular, but they can show many interesting things. On this slide you can see the results from some host. It seems obvious that port 22 is forwarded to a different machine than ports 80 and 443.

My latest work is an implementation of active OS fingerprinting.

I think that nowadays, with ip address space running short, more and more ip addresses use port forwarding. It's rather common to see one open port forwarded to one physical machine and other ports to a different host.

I thought it would be great to do active OS detection for every port rather than only per host. Normal Nmap OS detection can only be executed per host.

I implemented a somewhat limited version of the Nmap OS detection algorithm in Lua, so that you can try to detect the operating system behind every open tcp/ip port.

The results aren't perfect yet because of timing issues inside the NSE engine, but they are more granular than the basic p0f results. On this slide you can see the results from the same host as on the previous slide. Now it's obvious that port 22 is forwarded from a Linux box, and ports 80 and 443 are from a Windows machine.

Let's sum things up.

I created raw sockets/libpcap support for NSE. It was intended as an additional feature, but it made it possible to implement one of the most complicated parts of Nmap, OS detection, as a script.

One could ask whether it would be possible to implement all of Nmap in Lua.

Well. First let's talk about some other issues.

Nmap has a different event loop for every scanning stage. This is for historical reasons: programmers didn't know how to wait for both network sockets and many pcap descriptors in one event loop. The libpcap API is rather complicated, and it's not easy to mix it with normal sockets.

The problem I described can be generalized. Nmap is not the only project that faces this issue.

The point is that asynchronous programming fails if anything other than the event loop blocks. In Nmap the problem lies in specific libpcap limitations, but other projects also have to deal with blocking elements.

Web servers have a problem with the blocking stat(2) syscall. They use this call to check whether a file was modified on disk and needs to be reloaded. For example, lighttpd uses a lot of complicated code to address this issue. Basically lighty starts many threads, one for every physical disk, in which it does the blocking stat calls.

Web browsers share a similar problem. If you open a file from slow media, like a cdrom, the whole browser stops for a while. This is because the open(2) syscall blocks until the metadata is read from the media. I don't like it when my browser halts because one tab is slower than the others.

There are also a lot of asynchronous programs where dns queries are done using the normal operating system api, which is blocking. In the test environment the software runs perfectly because dns queries are fast, but in production the program fails to scale.
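The usual workaround for such calls is the one lighttpd uses: push the blocking call onto a worker thread and let the event loop carry on. Below is a minimal Python 3 sketch of that idea using `asyncio` and its default thread pool. It is an illustration only, unrelated to lighttpd's or Nmap's actual code, and the function names are made up.

```python
import asyncio
import os


async def stat_without_blocking(path):
    """Run the blocking os.stat() call in a worker thread."""
    loop = asyncio.get_running_loop()
    return await loop.run_in_executor(None, os.stat, path)


async def main(path):
    # Start the stat in the background; the event loop stays responsive.
    pending = asyncio.ensure_future(stat_without_blocking(path))
    await asyncio.sleep(0)            # other callbacks could run here
    result = await pending
    return result.st_mtime


if __name__ == "__main__":
    print(asyncio.run(main(os.curdir)))
```

The same pattern applies to blocking dns lookups: `socket.getaddrinfo` can be passed to `run_in_executor` in place of `os.stat`.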

In my opinion asynchronous programming should be easy and fun. Async libraries should hide low level problems from the programmer.

There are a lot of async libraries, but in my opinion there's no complete solution yet.

That’s why my dream is to create a yet-another-but-better asynchronous library.

Given such a perfect async library, Nmap could be rewritten around one event loop and possibly in a higher-level language.

Fyodor, the author of Nmap, has written a book. It's still in beta, but I hope it will be in shops in a few months. I highly recommend it to people interested in low-level networking and Nmap.

Thanks! I haven't described how promiscuous node detection works or how my libpcap dispatcher for Lua works. I'd love to write about it some day.

Thanks to Andrzej Teleżyński for proofreading this post.

Posted by majek at 13:00, 3 comments

I'll talk about my work on industrial robots. The robots belong to my university, PJIIT.

Here you can see our two Motoman SK6 robots. They were bought by the Japanese government from a fund supporting developing countries. The robots were produced in Japan, assembled in Sweden, serviced by Germany and located in Poland.

They were built for welding and painting in industry. They have no feedback: they will do everything to move to the destination point, no matter if someone's head is in the way. So watch out, they really could kill.

This is what our lab looks like today. Notice the big grey box on the left (and part of another one on the right). It's the robot controller, which is responsible for moving the robot. All software for the robots runs on these controllers.

On this slide you can see how the robot welds something. The robot moves to a specific point in space, welds, then moves to another point and so on. It does the same thing over and over again. It's similar with painting robots. They were programmed once to paint a thing and they just repeat the movement for years. On the right you can see an example of a program for a robot. It's called a job. It's basically just a series of points to which the robot should move and some simple commands to enable or disable the welder or brush.

This device is used to write jobs for the robot. As you can see there are a lot of buttons on this console. Most of the buttons don't do anything useful. On the other hand there are a lot of cryptic shortcuts that are used quite often (like: press the star button with the X key).

The problem is that I don't need a repeatable job in my lab. I want the robot to do something dynamic: grab something from the floor, catch a ball, give me a hand and so on. I don't want a robot to make the same moves over and over again. That's useless for me.

The board is programmable and can be connected to the computer using serial link. The board has unusual Intel i960 processor. I've mentioned that the Turbo board is programmable. To write a software for it you needed to:

I've mentioned that the Turbo board is programmable. To write a software for it you needed to: The next thing I needed to do, was to move the compiler stack to Linux. I decompiled the commercial libraries on Smalltalk, tested various open libc libraries, found an old GCC version that supported this special processor and wrote my own linker scripts. Finally I was able to compile a software on Linux.

The next thing I needed to do was to move the compiler stack to Linux. I decompiled the commercial libraries on Smalltalk, tested various open libc libraries, found an old GCC version that supported this special processor and wrote my own linker scripts. Finally, I was able to compile software on Linux.

On the left side of the slide you can see a page from the documentation. It's in Japanese and yes, I don't speak Japanese either. Most of the docs are in English, but unfortunately the most interesting parts aren't.

Using the Turbo board we were able to control the robot only in a basic way: move to a point and stop there.

This wasn't the end of our low-level problems. To reduce the latency we needed a faster serial link. 19.2 kbps was too slow for us.

This is what our communication looks like today. The robot emits 46.6 kbps, then we convert the signal to the industrial RS-422 format, and finally we use a custom-built FTDI USB-to-serial converter.
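To get a feel for why the link speed matters for latency, here is a rough back-of-the-envelope calculation in Python. The two rates are the ones quoted in this talk. The framing (one start bit, eight data bits, one stop bit, so 10 bits on the wire per byte) and the 32-byte message size are assumptions made only for illustration, not the robot's real protocol.

```python
def transfer_time_ms(payload_bytes: int,
                     bits_per_second: float,
                     bits_per_byte: int = 10) -> float:
    """Time to push a message over a serial line, in milliseconds.

    bits_per_byte=10 assumes 8N1 framing (start + 8 data + stop bit).
    """
    total_bits = payload_bytes * bits_per_byte
    return total_bits / bits_per_second * 1000.0


MESSAGE_BYTES = 32  # hypothetical size of one position update

if __name__ == "__main__":
    for rate in (19_200, 46_600):
        ms = transfer_time_ms(MESSAGE_BYTES, rate)
        print(f"{rate} bps: {ms:.2f} ms per {MESSAGE_BYTES}-byte message")
```

Under these assumptions a single message takes roughly 16.7 ms at 19.2 kbps and about 6.9 ms at 46.6 kbps, and that delay is paid on every exchange in the control loop.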

After more than 2 years of work we were able to compile software on Linux. We disassembled a lot of robot internals. I wasn't able to fix the half-duplex problem, but I created a software library that works around this issue.

It's time to say what we were able to build on top of this low-level software!

This project is one of the oldest. The idea is to create a cube that a robot can grab from the floor and do something with.

For example, hand it to the second robot, so that one robot can grab cubes and the other can build something from them (for example a tower). When the tower crashes, the roles are swapped.

The cubes are located based on information from the camera. This slide shows my work on the markers for the cubes. The red outline is computer generated.

After the experiment with the joystick I wondered if it's possible to create a better interface for controlling the robot. I discovered the Haptic device, which is basically a 3D pointing device. It's used by multimedia people to edit 3D graphic scenes. I thought it should be perfect for controlling the robot.

Have you seen the Wiimote experiments by Johnny Chung Lee? He created a 2D pointing device based on the Wiimote's infrared camera and an infrared diode.

I thought that it would be great to use two Wiimotes to get the 3D position of a diode and connect it to the robot. I wanted to achieve a similar experience as with the Haptic, but using less expensive hardware.
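The two-Wiimote idea boils down to stereo triangulation. The sketch below is not the code from the project. It assumes an idealised setup: two identical pinhole cameras placed side by side with parallel optical axes, a known distance between them, and dot coordinates reported on a 1024x768 grid. The focal length and baseline values are made up for illustration.

```python
import math
from typing import Tuple

WIDTH, HEIGHT = 1024, 768  # assumed size of the coordinate grid


def focal_length_px(horizontal_fov_deg: float, width: int = WIDTH) -> float:
    """Focal length in pixels for a pinhole camera with the given field of view."""
    return (width / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def triangulate(left_px: Tuple[float, float],
                right_px: Tuple[float, float],
                baseline_m: float,
                focal_px: float) -> Tuple[float, float, float]:
    """Return (x, y, z) of the diode in metres, relative to the left camera.

    x points towards the right camera, z points forward along the optical
    axis, and y follows the image rows.
    """
    # Shift pixel coordinates so that (0, 0) is the image centre.
    xl = left_px[0] - WIDTH / 2
    yl = left_px[1] - HEIGHT / 2
    xr = right_px[0] - WIDTH / 2

    # The same dot appears further left in the right camera's image.
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("dot must be in front of both cameras")

    z = focal_px * baseline_m / disparity  # depth from similar triangles
    x = xl * z / focal_px
    y = yl * z / focal_px
    return (x, y, z)


if __name__ == "__main__":
    # Made-up readings: cameras 20 cm apart, focal length of 1000 px.
    print(triangulate((562, 409), (462, 409), baseline_m=0.2, focal_px=1000.0))
```

Real cameras are never perfectly parallel, so a working setup would also need a calibration step. The sketch only shows why two 2D readings are enough to recover depth.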

Recently my friends, Aleksander Górski and Łukasz Hrynakowski, have been working on a project in which they put a SICK laser on the robot. The laser is basically a single infrared beam and a rotating mirror. It returns the distance to walls or other things in a plane. The idea is to move the laser from above down to the ground and create a semi-3D view of a target, like a person.
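Turning such a laser sweep into a set of 3D points is mostly trigonometry. The Python sketch below is an illustration under simple assumptions, not the project's code and not the SICK interface: the scanner sits at the origin, each reading is a (beam angle, distance) pair inside the scan plane, and the robot tilts that plane downwards by a known angle between sweeps.

```python
import math
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]
Reading = Tuple[float, float]  # (beam angle in degrees, distance in metres)


def beam_to_point(distance_m: float, beam_deg: float, tilt_deg: float) -> Point3D:
    """Convert one laser reading into x (sideways), y (forward), z (up).

    beam_deg is measured inside the scan plane from the forward direction.
    tilt_deg is how far the whole scan plane is pitched down towards the floor.
    """
    beam = math.radians(beam_deg)
    tilt = math.radians(tilt_deg)
    sideways = distance_m * math.sin(beam)
    forward_in_plane = distance_m * math.cos(beam)
    x = sideways
    y = forward_in_plane * math.cos(tilt)
    z = -forward_in_plane * math.sin(tilt)  # tilting down gives negative z
    return (x, y, z)


def sweep_to_cloud(sweeps: Iterable[Tuple[float, Iterable[Reading]]]) -> List[Point3D]:
    """Merge several planar scans, one per tilt angle, into one point list."""
    cloud: List[Point3D] = []
    for tilt_deg, readings in sweeps:
        for beam_deg, distance_m in readings:
            cloud.append(beam_to_point(distance_m, beam_deg, tilt_deg))
    return cloud


if __name__ == "__main__":
    # Made-up data: two sweeps of three beams each.
    sweeps = [
        (0.0, [(-10.0, 2.0), (0.0, 2.0), (10.0, 2.0)]),
        (5.0, [(-10.0, 2.1), (0.0, 2.1), (10.0, 2.1)]),
    ]
    for point in sweep_to_cloud(sweeps):
        print(point)
```

Because every point is measured from the scanner's position, only surfaces facing the scanner show up in the result.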

Some time ago some Google Street View cars were seen in Europe. It seems that Google is using similar SICK lasers.

And here you can see the results of a scan. It's technically called a 2.5D scan, because it's not full 3D, only 3D from one side.

Finally, a scan of the faces of the two authors of the project, and mine.

That's it! I hope you learned something from this speech.

Posted by

majek

at

12:51

2

comments

Labels: Motoman