Presentation: Nmap and asynchronous programming

In this presentation I would like to talk about my adventure with extending Nmap and what I learned from it regarding asynchronous programming.

Nmap is a port scanner with many other features.

On this slide you can see an example of Nmap output.

It shows the open tcp ports on a target machine. It also shows the service type (ssh, http or something else) and the version of the daemon running behind each discovered port.

Nmap can execute user-defined scripts for various services. Above we can see an example result of a script that retrieves the HTML title from an HTTP server.

Nmap also tries to guess the operating system on the target machine. In the example output Nmap's OS detection wasn't very successful; the OS guesses aren't accurate.

Nmap isn't just a basic port scanner; it's a fully featured network discovery tool. It sends a lot of data to the target hosts and opens a lot of tcp/ip or udp connections to them. To do this efficiently Nmap must be written in a specific way: asynchronously, using nonblocking sockets where possible.

Some parts of Nmap, like the OS detection engine, are extremely sensitive to timing.

Nmap sends a lot of custom-built packets. The system API for sending custom packets is called raw sockets. Unfortunately these sockets can't be used to listen for raw packets on the wire. To read packets Nmap uses the libpcap (packet capture) library. Libpcap is maintained by the tcpdump project; the graphical analyzer Wireshark uses it as well.

When a program opens a pcap descriptor to sniff the network, it passes a BPF filter so that it receives only the packets that match the filter. The BPF filter is something like a regular expression for packets. The filter is compiled and matched against all the packets that come through the wire. This works extremely fast for a single pcap descriptor, but it was not designed to handle thousands of open pcap descriptors. Every packet that arrives at your machine is matched against the filter of every open pcap descriptor, so opening too many of them may slow down the whole system.
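The cost described above can be sketched in a few lines. This is only a simulation under stated assumptions: the `Packet` class, the `deliver` function and the lambda predicates are hypothetical stand-ins for real packets and compiled BPF programs, and no libpcap call is involved. It shows that the number of filter evaluations grows as packets times open descriptors.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Packet:
    proto: str
    src_port: int
    dst_port: int


# A "filter" here is a plain Python predicate standing in for a compiled BPF program.
Filter = Callable[[Packet], bool]


def deliver(packets: List[Packet], filters: List[Filter]) -> Tuple[Dict[int, List[Packet]], int]:
    """Match every packet against every open filter and count the evaluations."""
    matches: Dict[int, List[Packet]] = {i: [] for i in range(len(filters))}
    evaluations = 0
    for pkt in packets:
        for i, flt in enumerate(filters):
            evaluations += 1
            if flt(pkt):
                matches[i].append(pkt)
    return matches, evaluations


# 100 packets on the wire, 50 open "descriptors", each interested in one port.
packets = [Packet("tcp", 80, 40000 + i) for i in range(100)]
filters = [
    (lambda p, port=40000 + i: p.proto == "tcp" and p.dst_port == port)
    for i in range(50)
]
matches, evaluations = deliver(packets, filters)
print(evaluations)  # 100 packets * 50 filters
```

Each descriptor receives a single packet, yet all 50 filters run for every packet, which is why sharing one descriptor is attractive.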

Before going on, please make sure you know what a three-way handshake on tcp/ip connections is.

Every Nmap scan consists of several stages.

First Nmap must know if the target hosts are really online. This is especially useful when you're scanning a wide range of ip addresses. We really don't want to waste time waiting for black-hole ip addresses. Detecting whether a host is up can be done using a simple icmp ping packet, or using more complicated techniques.

After we know which hosts are up, Nmap does the real scanning. It sends a lot of SYN packets to discover which network ports are open or closed.

After we know which ports are open, we can start the service and version detection phase. Nmap opens a lot of tcp/ip connections to detect what services and exactly which daemons are running there.

After this, Nmap can execute operating system detection, and try to guess which OS is running on a target host.

And finally Nmap can execute the Nmap Scripting Engine to grab even more detailed information about the services running on open ports. In the first slide we saw the results of a script that printed the title of an HTML page grabbed from an open HTTP server.

Most people don't understand how Nmap's basic -sS SYN scanning works. This is really port scanning kindergarten, so if you know how it works, you can skip this slide.

After the host discovery phase we know that a target host is online. Then Nmap tries to determine which tcp ports are open. To do this, Nmap sends a lot of SYN packets to the target host via a raw socket, bypassing the operating system's networking stack.

If the remote port is open, the scanning host receives a SYN+ACK packet. But the tcp/ip connection wasn't actually established by the scanning host's OS: Nmap sent the SYN packet, not the OS. The OS doesn't recognize the SYN+ACK response packet, so it sends an RST packet, which tells the target to drop the connection.
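The decision made for each probed port can be sketched as a small function. This is an illustration, not Nmap's code: the function name is hypothetical, the flag constants are the standard TCP header bits, and the "filtered" result for a missing reply is not covered in this talk (it is what Nmap reports when a probe gets no answer).

```python
from typing import Optional

# Standard TCP flag bits.
SYN, RST, ACK = 0x02, 0x04, 0x10


def classify_syn_probe(reply_flags: Optional[int]) -> str:
    """Map the flags of the reply to a SYN probe onto a port state."""
    if reply_flags is None:
        return "filtered"  # no reply at all (assumption: not covered in the talk)
    if reply_flags & RST:
        return "closed"    # target refused the connection
    if (reply_flags & SYN) and (reply_flags & ACK):
        return "open"      # target accepted; the scanner's OS will answer with RST
    return "unknown"


print(classify_syn_probe(SYN | ACK))
print(classify_syn_probe(RST | ACK))
print(classify_syn_probe(None))
```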

Why scan this way instead of just opening a lot of normal operating system tcp/ip connections? A normal tcp/ip connection would need to be closed gracefully (FIN flag), using even more packets. The SYN scan is faster, with at most 3 packets needed to know if a port is open or closed.

I prefer this diagram over the previous slide. I would like to repeat that Nmap injects the first SYN packet on the wire. Then Nmap sniffs for the SYN+ACK packet on the wire.

Of course Nmap can scan many ports at the same time.

We can say that Nmap first sends a lot of SYN packets and then waits for the SYN+ACK or RST packets. When a packet is received from the wire, Nmap does something with it, for example updates its data structures with the received information. Then Nmap goes back to the main event loop and waits for yet another packet from the wire.
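The "send many probes, then loop over replies" shape can be sketched without touching the network. Everything here is an assumption made for illustration: `run_scan` is a hypothetical name, and the "wire" is a queue filled by a fake target that answers immediately, whereas a real target answers later and in any order.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Set, Tuple

SYN, RST, ACK = 0x02, 0x04, 0x10


def run_scan(ports: Iterable[int], open_ports: Set[int]) -> Dict[int, str]:
    wire: Deque[Tuple[int, int]] = deque()  # simulated replies: (port, tcp flags)
    outstanding: Set[int] = set()

    # Phase 1: send all SYN probes without waiting for individual replies.
    for port in ports:
        outstanding.add(port)
        reply = (SYN | ACK) if port in open_ports else RST
        wire.append((port, reply))  # the fake target answers right away

    # Phase 2: the event loop. Take one reply, update state, go back to waiting.
    results: Dict[int, str] = {}
    while outstanding and wire:
        port, flags = wire.popleft()
        if port not in outstanding:
            continue  # a reply we are not waiting for
        outstanding.discard(port)
        is_open = bool(flags & SYN) and bool(flags & ACK)
        results[port] = "open" if is_open else "closed"
    return results


print(run_scan([22, 80, 443, 8080], open_ports={22, 80}))
```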

The point is that every Nmap stage is waiting for something different.

The host discovery phase waits for icmp ping responses or many other packet types.

Port scanning, as I mentioned in previous slides, waits for SYN+ACK or RST packets.

Service detection, on the other hand, uses a lot of normal tcp/ip or udp connections.

The OS detection engine waits for a lot of magic packets.

The Scripting Engine also waits on normal tcp/ip or udp connections.

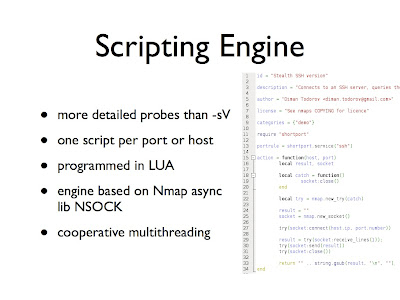

Let's now talk about the Scripting Engine in detail.

The Nmap Scripting Engine (NSE) is a new feature in Nmap. NSE was created because Nmap's service and version detection wasn't accurate enough. Service detection is really a very basic technology. It just grabs a banner from an open port and matches it against thousands of regular expressions.
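Banner matching of this kind can be sketched as follows. The three signatures are hypothetical and written only for this example; they are not taken from Nmap's real probe database, which has its own file format.

```python
import re
from typing import List, Optional, Pattern, Tuple

# Hypothetical signatures: (service name, pattern applied to the banner).
SIGNATURES: List[Tuple[str, Pattern[str]]] = [
    ("ssh", re.compile(r"^SSH-(?P<proto>[\d.]+)-OpenSSH_(?P<version>\S+)")),
    ("http", re.compile(r"^HTTP/1\.[01] \d{3} ")),
    ("smtp", re.compile(r"^220 \S+ ESMTP")),
]


def detect_service(banner: str) -> Optional[Tuple[str, Optional[str]]]:
    """Return (service, version) for the first matching signature, else None."""
    for name, pattern in SIGNATURES:
        match = pattern.search(banner)
        if match:
            # Only some signatures capture a version string.
            return name, match.groupdict().get("version")
    return None


print(detect_service("SSH-2.0-OpenSSH_5.1p1 Debian-5\r\n"))
print(detect_service("HTTP/1.1 200 OK\r\n"))
```

Note that anything answering with an HTTP status line is labelled "http" here, whatever program is really behind the port. That limitation is inherent to banner matching.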

This is enough for detecting most daemons, but it doesn't work for more complicated things. For example the Skype client opens a tcp port. This port returns valid answers to http queries, so it was detected as an http server. But Skype is not an http server. We weren't able to distinguish that before NSE.

The technology behind scripts is not complicated. A script is written sequentially in Lua, a convenient, simple, high-level scripting language.

After normal scanning Nmap discovers which scripts should be executed and runs them against open ports. A script usually opens a network socket and sends and receives data through it. If a script is waiting for something on a network socket, it is frozen and suspended. When we receive something from the socket, we continue the script until it's suspended again (or exits).

This is called cooperative multitasking. It allows us to write scripts sequentially and execute a lot of them concurrently, because the scripts spend most of their time waiting for data on a socket.
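The suspend-and-resume idea can be sketched with Python generators standing in for Lua coroutines. This is not NSE code: `banner_script`, `run_all` and the simulated `incoming` data are hypothetical names chosen for the example. Each script reads sequentially, and `yield` marks the points where it waits on the network.

```python
from collections import deque
from typing import Deque, Dict, Generator, List, Tuple

Script = Generator[Tuple[str, str], str, None]


def banner_script(name: str, host: str, log: List[str]) -> Script:
    """A script written sequentially; each yield suspends it until data arrives."""
    log.append(f"{name}: connect to {host}")
    banner = yield ("recv", host)
    log.append(f"{name}: got {banner}")
    reply = yield ("recv", host)
    log.append(f"{name}: got {reply}")


def run_all(scripts: List[Script], incoming: Dict[str, Deque[str]]) -> None:
    """Resume each suspended script whenever data for its host is available."""
    waiting: Dict[Script, Tuple[str, str]] = {}
    for s in scripts:
        waiting[s] = next(s)  # run until the first wait
    while waiting:
        progressed = False
        for s, (_op, host) in list(waiting.items()):
            if not incoming[host]:
                continue  # still waiting, leave it suspended
            data = incoming[host].popleft()
            progressed = True
            try:
                waiting[s] = s.send(data)  # resume until the next wait
            except StopIteration:
                del waiting[s]  # script finished
        if not progressed:
            break  # nothing left on the simulated network


log: List[str] = []
incoming = {
    "10.0.0.1": deque(["SSH-2.0-demo", "bye"]),
    "10.0.0.2": deque(["220 demo ESMTP", "250 ok"]),
}
run_all(
    [
        banner_script("ssh-script", "10.0.0.1", log),
        banner_script("smtp-script", "10.0.0.2", log),
    ],
    incoming,
)
print("\n".join(log))
```

The log shows the two scripts interleaving, although each one is written as straight-line code.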

The scripting engine is based on Nsock, Nmap's asynchronous library. This library is capable of waiting on many network descriptors at the same time.

Like every asynchronous library, Nsock has a main loop in which it waits for descriptors. When something happens on a descriptor, Nsock executes the callback registered for that descriptor.
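The main-loop-plus-callbacks pattern can be sketched with Python's standard `selectors` module. This shows the general pattern only, not Nsock's API. The function names are hypothetical, and local socket pairs stand in for real network connections.

```python
import selectors
import socket
from typing import List

received: List[bytes] = []


def on_readable(sock: socket.socket) -> None:
    """Callback run by the loop when its descriptor has data."""
    received.append(sock.recv(1024))


def run_event_loop(sel: selectors.BaseSelector, max_events: int) -> int:
    """Wait on all registered descriptors and run the callback of each ready one."""
    handled = 0
    while handled < max_events:
        events = sel.select(timeout=1.0)
        if not events:
            break  # nothing happened within the timeout
        for key, _mask in events:
            key.data(key.fileobj)  # key.data holds the registered callback
            handled += 1
    return handled


sel = selectors.DefaultSelector()
a_read, a_write = socket.socketpair()
b_read, b_write = socket.socketpair()
sel.register(a_read, selectors.EVENT_READ, data=on_readable)
sel.register(b_read, selectors.EVENT_READ, data=on_readable)

a_write.sendall(b"first")
b_write.sendall(b"second")
handled = run_event_loop(sel, max_events=2)

for s in (a_read, a_write, b_read, b_write):
    s.close()
sel.close()
print(sorted(received))
```

In a scripting engine the callback would resume the suspended script instead of appending to a list.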

Connecting this with the Lua engine is pretty straightforward. When something happens on a descriptor, we just resume the Lua script that is waiting for data on this descriptor.

I thought that NSE was great, but writing scripts that can only wait for normal tcp/ip or udp connections is boring. I thought that it would be great to do something at a lower level, like injecting custom packets into tcp/ip connections, or listening to raw packets from the target host.

That's why I created raw sockets/libpcap support for NSE. It turned out to be rather complicated.

Adding pcap support to Nsock was only part of the job. The idea of the changes is rather clear: in one event loop we need to wait for network descriptors and, at the same time, for pcap descriptors. Internally it's rather complicated because of libpcap limitations.

As a result, when a packet is received from a libpcap descriptor, a callback is called, just like with normal network sockets.

Connecting this to the Lua engine wasn't easy. The main problem is that, as I mentioned before, pcap descriptors are rather heavy. So one pcap descriptor is opened from a script and shared between all the instances of the same script. This means that when a packet is received from the network we don't actually know which Lua thread should be woken.

I needed to create a custom packet dispatcher to know which thread to wake up.
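How the dispatcher works is not described in this presentation, so the following is only one possible shape, offered as an assumption: each waiting thread registers a key it expects, and a key function computes the same kind of key from every captured packet. `PacketDispatcher`, `wait_for` and `on_packet` are hypothetical names, and packets are plain dictionaries.

```python
from typing import Callable, Dict, Hashable, List

Packet = Dict[str, object]
Wake = Callable[[Packet], None]


class PacketDispatcher:
    """Routes packets from one shared capture to the thread waiting for them."""

    def __init__(self, key_of: Callable[[Packet], Hashable]) -> None:
        self._key_of = key_of
        self._waiters: Dict[Hashable, List[Wake]] = {}

    def wait_for(self, key: Hashable, wake: Wake) -> None:
        """Register a wake-up function for packets that map to this key."""
        self._waiters.setdefault(key, []).append(wake)

    def on_packet(self, packet: Packet) -> int:
        """Called for every captured packet; returns how many waiters were woken."""
        waiters = self._waiters.pop(self._key_of(packet), [])
        for wake in waiters:
            wake(packet)
        return len(waiters)


# Two script instances share one capture; each waits for a different source port.
dispatcher = PacketDispatcher(key_of=lambda p: (p["src"], p["sport"]))
woken: List[str] = []
dispatcher.wait_for(("10.0.0.5", 22), lambda p: woken.append("thread-for-22"))
dispatcher.wait_for(("10.0.0.5", 80), lambda p: woken.append("thread-for-80"))

matched = dispatcher.on_packet({"src": "10.0.0.5", "sport": 80})
ignored = dispatcher.on_packet({"src": "10.0.0.9", "sport": 80})
print(woken, matched, ignored)
```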

Finally I was able to open pcap descriptors and send raw packets from Lua scripts. I think that the possibilities of such scripts are huge. Here are some examples of my scripts.

The first script is able to detect which hosts are using tcpdump in the local network. The script tries to determine if a host has a network card in promiscuous mode. I'd be happy to explain how it works, but that's not in the scope of this presentation. We can say that the script sends some custom arp-request packets and waits for arp-reply answers.

The next script is an implementation of lcamtuf's 0trace tool. It's a traceroute-like tool that can go through firewalls. It injects custom packets with small TTL values into a valid tcp/ip connection, so that it can record the ip addresses of the routers on the way.

The next script is an implementation of the "ping -R" record route option. Record route is an option in the ip header that tells the routers on the way to record their ip addresses. The results are more accurate than a normal traceroute, but there are only 9 slots for ip addresses in an ip packet.
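The limit of 9 addresses follows from the IPv4 header layout in RFC 791: the header length field is 4 bits wide and counts 32-bit words, and the record route option spends 3 bytes on its own type, length and pointer fields. The arithmetic, with illustrative constant names:

```python
MAX_IHL_WORDS = 15        # largest value of the 4-bit header length field
BYTES_PER_WORD = 4
FIXED_HEADER_BYTES = 20   # IPv4 header without options
RR_OPTION_OVERHEAD = 3    # option type + length + pointer, one byte each
IPV4_ADDRESS_BYTES = 4

max_header_bytes = MAX_IHL_WORDS * BYTES_PER_WORD              # 60
option_space_bytes = max_header_bytes - FIXED_HEADER_BYTES     # 40
slots = (option_space_bytes - RR_OPTION_OVERHEAD) // IPV4_ADDRESS_BYTES

print(slots)  # 9
```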

My favorite script is an implementation of another lcamtuf tool called p0f. P0f is a passive fingerprinting project. Basically p0f creates an operating system fingerprint from a single SYN packet. The fingerprint can tell which OS the target host is running without sending any custom packets. It's stealthy and passive.

I wanted to implement this tool, but Nmap is an active scanner. The idea was to open a normal tcp/ip connection to the target and create a fingerprint based on the SYN+ACK response. The results aren't very granular, but they can show many interesting things. In this slide you can see the results from some host. It seems obvious that port 22 is forwarded to a different machine than ports 80 and 443.

My latest work is an implementation of active OS fingerprinting.

I think that nowadays, in the time of limited ip space, more and more ip addresses use port forwarding. It's rather common to see one open port forwarded to one physical machine and other ports forwarded to a different host.

I thought that it would be great to do active OS detection for every port, rather than only per host. Normal Nmap OS detection can only be executed per host.

I implemented a somewhat limited version of the Nmap OS detection algorithm in Lua, so that you can try to detect the operating system behind every open tcp/ip port.

The results aren't perfect yet. This is because of timing issues inside the NSE engine. But they are more granular than the basic p0f results. On this slide you can see the results from the same host as on the previous slide. Now it's obvious that port 22 is forwarded from a Linux box, and ports 80 and 443 are from a Windows machine.

Let's sum things up.

I created raw sockets/libpcap support for NSE. This was intended as an additional feature, but it made it possible to implement one of the most complicated parts of Nmap, OS detection, as a script.

One could ask: would it be possible to implement all of Nmap in Lua?

Well. First let's talk about some other issues.

Nmap has a different event loop for every scanning stage. This is for historical reasons: programmers didn't know how to wait for both network sockets and many pcap descriptors in one event loop. The libpcap API is rather complicated, and it's not easy to mix it with normal sockets.

The problem I described can be generalized. Not only Nmap faces this issue.

The point is that asynchronous programming fails if anything other than the event loop blocks. In Nmap the problem is with specific libpcap limitations, but other projects also face some blocking elements.

Web servers have a problem with the blocking stat(2) syscall. They use this call to check if a file was modified on disk and needs to be reloaded. For example lighttpd uses a lot of complicated code to address this issue. Basically lighty starts many threads, one for every physical disk, in which it does the blocking stat calls.

Web browsers share a similar problem. If you open a file from slow media, like a cdrom, the whole browser stops for a while. This is because the open(2) syscall blocks until the metadata is read from the media. I don't like it when my browser halts because one tab is slower than the others.

There are also a lot of asynchronous programs where dns queries are done using the normal operating system api, which is blocking. In the test environment the software runs perfectly, because dns queries are fast, but in production the program fails to scale.
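The effect of a single blocking call on an event loop can be demonstrated with Python's `asyncio`. This is a sketch under stated assumptions: `blocking_lookup` is a hypothetical stand-in that only sleeps, not a real resolver call, and the `ticker` coroutine represents all the other work the loop should be doing meanwhile. Moving the call to a worker thread with `run_in_executor` is one common workaround, similar in spirit to the lighttpd threads described above.

```python
import asyncio
import time
from typing import List


def blocking_lookup(name: str) -> str:
    """Stand-in for a blocking call such as a dns lookup; it only sleeps."""
    time.sleep(0.2)
    return f"resolved:{name}"


async def ticker(ticks: List[float], stop: asyncio.Event) -> None:
    """Other work on the same loop; records a timestamp each time it gets to run."""
    while not stop.is_set():
        ticks.append(time.monotonic())
        await asyncio.sleep(0.01)


async def main(offload: bool) -> int:
    ticks: List[float] = []
    stop = asyncio.Event()
    task = asyncio.create_task(ticker(ticks, stop))
    await asyncio.sleep(0)  # let the ticker start
    if offload:
        loop = asyncio.get_running_loop()
        # The blocking call runs in a worker thread; the loop keeps turning.
        await loop.run_in_executor(None, blocking_lookup, "example.test")
    else:
        # The blocking call runs on the loop thread; nothing else can run.
        blocking_lookup("example.test")
    stop.set()
    await task
    return len(ticks)


blocked_ticks = asyncio.run(main(offload=False))
offloaded_ticks = asyncio.run(main(offload=True))
print(blocked_ticks, offloaded_ticks)
```

When the call runs on the loop thread the ticker gets to run only once. When it is offloaded, the ticker keeps running for the whole duration of the lookup.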

In my opinion asynchronous programming should be easy and fun. Async libraries should hide low level problems from the programmer.

There are a lot of async libraries, but in my opinion there's no complete solution yet.

That’s why my dream is to create yet-another-but-better asynchronous library.

Given such a perfect async library, Nmap could be rewritten around one event loop, possibly in a higher-level language.

Fyodor, the author of Nmap, wrote a book. It's still in beta, but I hope it will be in shops in a few months. I highly recommend it to people interested in low-level networking and Nmap.

Thanks! I haven't described how promiscuous node detection works and how my libpcap dispatcher for Lua works. I'd love to write about it some day.

Thanks to Andrzej Teleżyński for proofreading this post.